News

- September, 2020: Website launched.

- September, 2020: Paper available (see Downloads).

- December, 2020: Code and data available (see Downloads).

Abstract

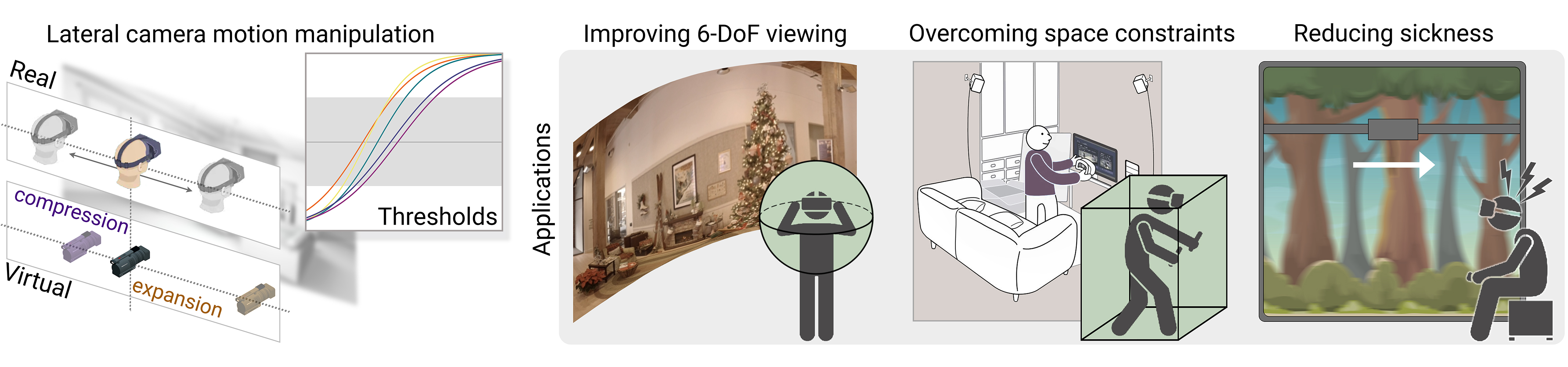

Virtual Reality (VR) systems increase immersion by reproducing users’ movements in the real world. However, several works have shown that this real-to-virtual mapping does not need to be precise in order to convey a realistic experience. Being able to alter this mapping has many potential applications, since achieving an accurate real-to-virtual mapping is not always possible due to limitations in the capture or display hardware, or in the physical space available. In this work, we measure detection thresholds for lateral translation gains of virtual camera motion in response to the corresponding head motion under natural viewing, and in the absence of locomotion, so that virtual camera movement can be either compressed or expanded while these manipulations remain undetected. Finally, we propose three applications for our method, addressing three key problems in VR: improving 6-DoF viewing for captured 360° footage, overcoming physical constraints, and reducing simulator sickness. We have further validated our thresholds and evaluated our applications by means of additional user studies confirming that our manipulations remain imperceptible, and showing that (i) compressing virtual camera motion reduces visible artifacts in 6-DoF, hence improving perceived quality, (ii) virtual expansion allows for completion of virtual tasks within a reduced physical space, and (iii) simulator sickness may be alleviated in simple scenarios when our compression method is applied.
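As a rough illustration of what a lateral translation gain means, the sketch below scales the sideways displacement of the tracked head before it is applied to the virtual camera. It is not the released code: the names `Vec3` and `apply_lateral_gain`, the choice of `x` as the lateral axis, and the gain values are all assumptions made for this example, and the gains shown are not the measured detection thresholds.

```python
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float  # lateral axis (assumed for this sketch)
    y: float  # vertical axis
    z: float  # depth axis


def apply_lateral_gain(head: Vec3, origin: Vec3, gain: float) -> Vec3:
    """Map a tracked head position to a virtual camera position.

    Only the lateral displacement from `origin` is scaled:
    gain < 1 compresses virtual camera motion, gain > 1 expands it,
    and gain == 1 reproduces the real motion unchanged.
    """
    lateral_offset = head.x - origin.x
    return Vec3(origin.x + gain * lateral_offset, head.y, head.z)


# Hypothetical values: the head moves 0.2 m to the right of the origin.
origin = Vec3(0.0, 1.6, 0.0)
head = Vec3(0.2, 1.6, 0.0)

compressed = apply_lateral_gain(head, origin, gain=0.5)  # camera moves 0.1 m
expanded = apply_lateral_gain(head, origin, gain=2.0)    # camera moves 0.4 m
```

A gain below one corresponds to the compression used for 6-DoF viewing and simulator sickness, while a gain above one corresponds to the expansion used to work within a reduced physical space.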

Downloads

The code and dataset provided are the property of Universidad de Zaragoza and are free to use for non-commercial purposes.

Bibtex

Related

Acknowledgements

We thank Itrat Rubab for her help running some of the experiments. This work has received funding from the European Research Council (ERC) under the EU’s Horizon 2020 research and innovation programme (project CHAMELEON, Grant no. 682080), from the European Union MSCA-ITN programme (project DyViTo, Grant no. 765121), from the Spanish Ministry of Economy and Competitiveness (projects TIN2016-78753-P, and PID2019-105004GB-I00), and from the Government of Aragon (G&I Lab reference group). Ana Serrano was additionally supported by the Max Planck Institute for Informatics (Lise Meitner Postdoctoral Fellowship).