News

- November, 2016: Paper and supplementary materials available (see Downloads).

- November, 2016: Website launched.

Abstract

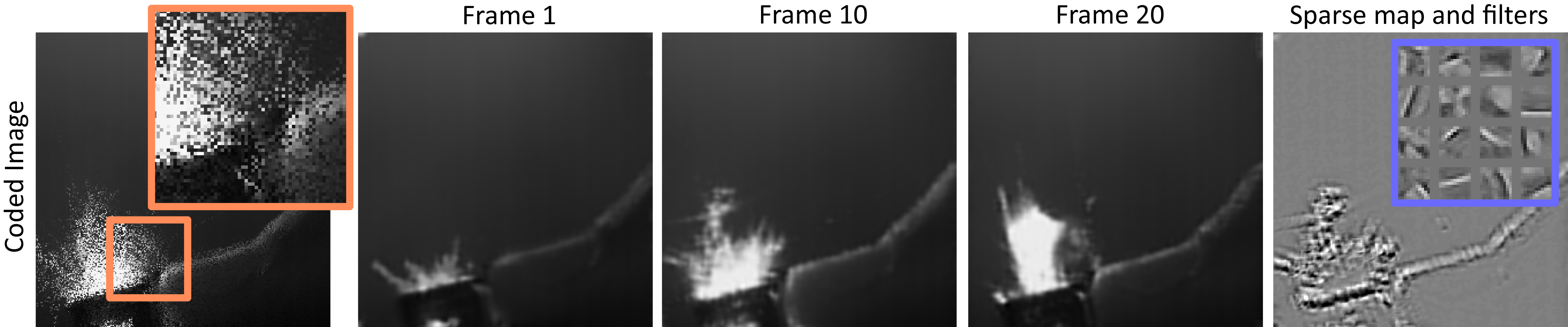

Video capture is limited by the trade-off between spatial and temporal resolution: when capturing videos of high temporal resolution, the spatial resolution decreases due to bandwidth limitations in the capture system. Achieving both high spatial and temporal resolution is only possible with highly specialized and very expensive hardware, and even then the same basic trade-off remains. The recent introduction of compressive sensing and sparse reconstruction techniques allows for the capture of single-shot high-speed video, by coding the temporal information in a single frame, and then reconstructing the full video sequence from this single coded image and a trained dictionary of image patches. In this paper, we first analyze this approach, and find insights that help improve the quality of the reconstructed videos. We then introduce a novel technique, based on convolutional sparse coding (CSC), and show how it outperforms the state-of-the-art, patch-based approach in terms of flexibility and efficiency, due to the convolutional nature of its filter banks. The key idea for CSC high-speed video acquisition is extending the basic formulation by imposing an additional constraint in the temporal dimension, which enforces sparsity of the first-order derivatives over time.
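To make the two ideas in the abstract concrete, the following is a minimal NumPy sketch, not the authors' implementation. It illustrates (1) coding a short video into a single image through a per-pixel temporal mask, and (2) measuring the L1 norm of first-order temporal differences, the quantity a temporal sparsity constraint would penalize. The names `coded_capture` and `temporal_l1`, the random single-sample-per-pixel mask, and the choice to apply the temporal term directly to frames are all assumptions made for illustration; the abstract does not specify these details.

```python
import numpy as np


def coded_capture(video, mask):
    """Collapse a (T, H, W) video into one (H, W) coded image.

    Each pixel integrates only the frames where its mask entry is nonzero.
    (Hypothetical helper; the sampling pattern is an assumption.)
    """
    if video.shape != mask.shape:
        raise ValueError("video and mask must share the shape (T, H, W)")
    return (video * mask).sum(axis=0)


def temporal_l1(frames):
    """L1 norm of first-order differences along the time axis (axis 0)."""
    return float(np.abs(np.diff(frames, axis=0)).sum())


rng = np.random.default_rng(0)
T, H, W = 8, 16, 16
video = rng.random((T, H, W))

# Assumed sampling pattern: every pixel is exposed during exactly one
# randomly chosen frame of the sequence.
sample_time = rng.integers(0, T, size=(H, W))
mask = (np.arange(T)[:, None, None] == sample_time[None, :, :]).astype(video.dtype)

coded = coded_capture(video, mask)   # the single coded frame
penalty = temporal_l1(video)         # large for noise, zero for a static scene
```

Reconstruction would then search for a video that reproduces `coded` under the same mask while keeping a sparsity penalty such as `temporal_l1` small; that optimization is not shown here.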

Downloads

- Paper [12.5 MB]

- Supplementary material [ZIP, 38 MB]

- Code with examples [RAR, 7 GB]

- High-speed video dataset (1000 fps, captured with a Photron SA2) [Shared folder]

The code and dataset provided are the property of Universidad de Zaragoza and are free to use for non-commercial purposes.

Bibtex

Related

- 2016: Convolutional Sparse Coding for High Dynamic Range Imaging

- 2015: Compressive High-Speed Video Acquisition

Acknowledgements

The authors would like to thank the Laser & Optical Technologies department from the Aragon Institute of Engineering Research (I3A), as well as the Universidad Rey Juan Carlos, for providing a high-speed camera and some of the videos used in this paper. This research has been partially funded by an ERC Consolidator Grant (project CHAMELEON), and the Spanish Ministry of Economy and Competitiveness (projects LIGHTSLICE, LIGHTSPEED, BLINK, and IMAGER). Ana Serrano was supported by an FPI grant from the Spanish Ministry of Economy and Competitiveness; Elena Garces was partially supported by a grant from Gobierno de Aragon; Diego Gutierrez was additionally funded by a Google Faculty Research Award, and the BBVA Foundation; and Belen Masia was partially supported by the Max Planck Center for Visual Computing and Communication.